🌟Deploying prometheus-operator to monitor the K8S cluster

Download the source code

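```shell
# -O saves the archive under the file name that the tar command below expects
wget -O kube-prometheus-0.11.0.tar.gz https://github.com/prometheus-operator/kube-prometheus/archive/refs/tags/v0.11.0.tar.gz
```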

Extract the archive

```shell
[root@master231 ~]# tar xf kube-prometheus-0.11.0.tar.gz -C /zhu/manifests/add-ons/
[root@master231 ~]# cd /zhu/manifests/add-ons/kube-prometheus-0.11.0/
[root@master231 kube-prometheus-0.11.0]#
```

Import the following images

alertmanager-v0.24.0.tar.gz

blackbox-exporter-v0.21.0.tar.gz

configmap-reload-v0.5.0.tar.gz

grafana-v8.5.5.tar.gz

kube-rbac-proxy-v0.12.0.tar.gz

kube-state-metrics-v2.5.0.tar.gz

node-exporter-v1.3.1.tar.gz

prometheus-adapter-v0.9.1.tar.gz

prometheus-config-reloader-v0.57.0.tar.gz

prometheus-operator-v0.57.0.tar.gz

prometheus-v2.36.1.tar.gz

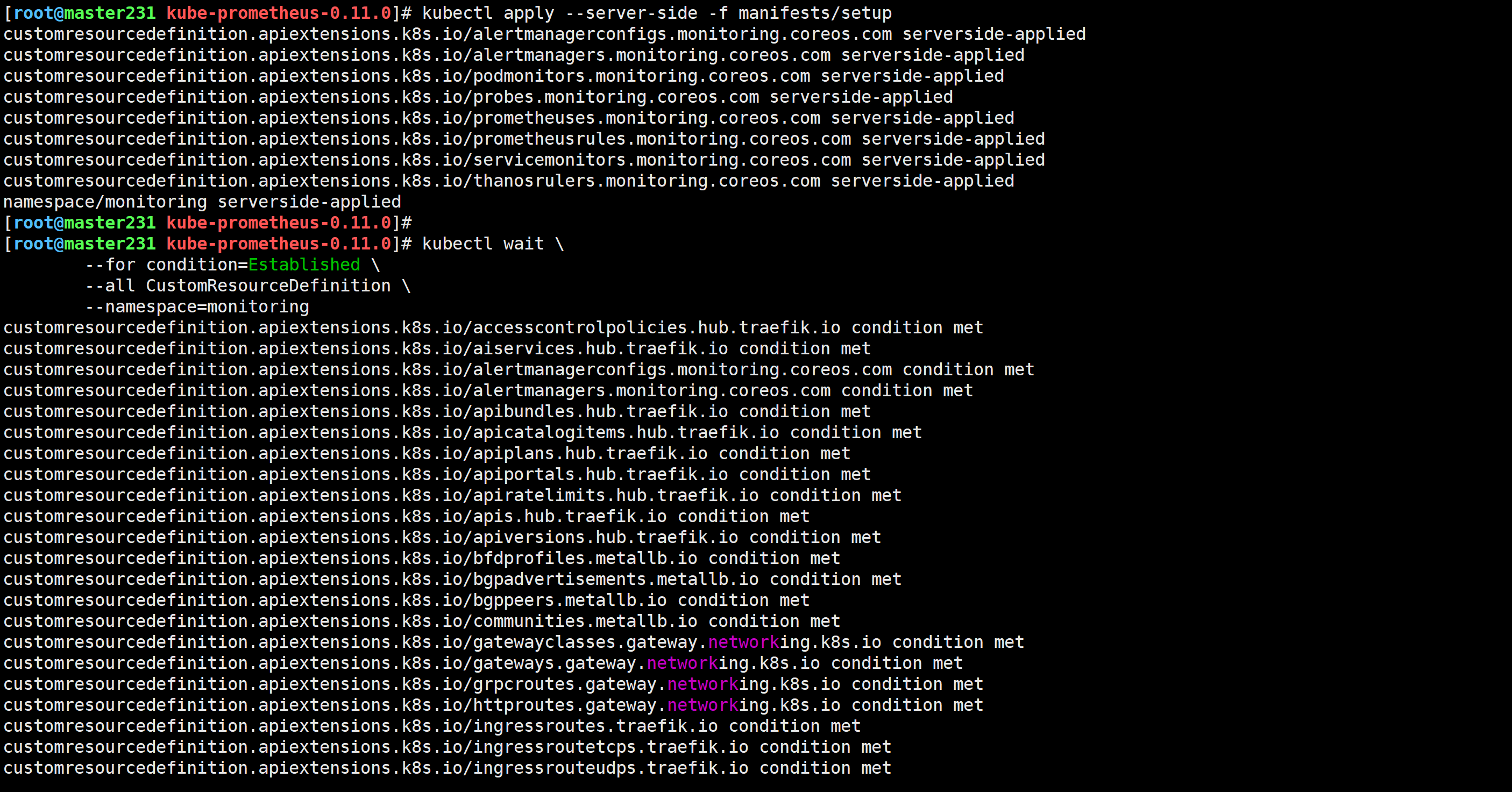

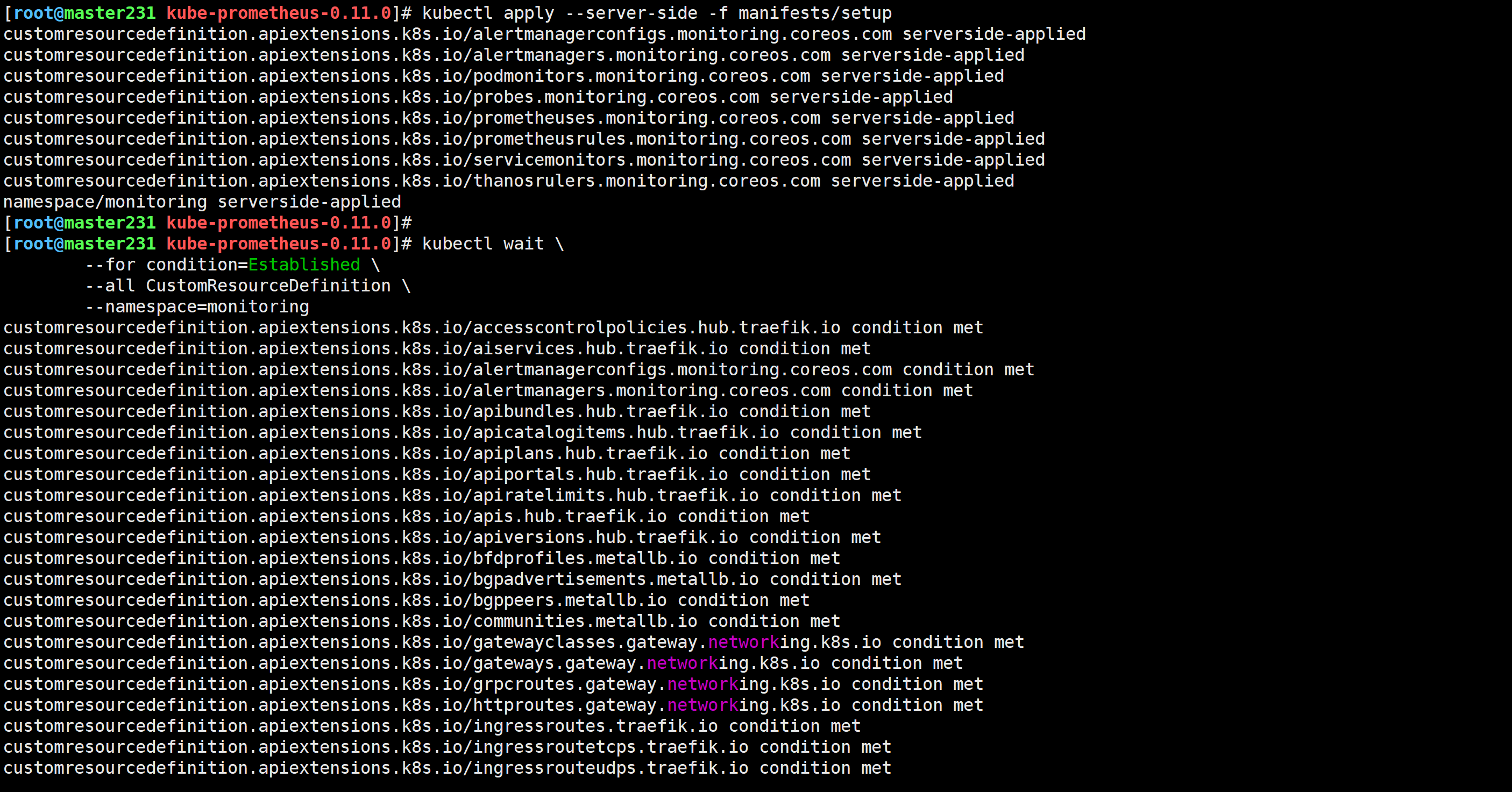
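Each archive has to be loaded into the container runtime on every node where these Pods may be scheduled. The following is a minimal sketch. It assumes Docker is the runtime and that the archives sit together in one directory. `load_images` is a hypothetical helper name.

```shell
# Hypothetical helper: load every *.tar.gz image archive in a directory into
# the local Docker daemon. Assumption: Docker is the node's container runtime
# and the archives listed above were copied into this directory.
load_images() {
    dir=${1:-.}
    count=0
    for f in "$dir"/*.tar.gz; do
        # With no matches the glob stays literal, so skip non-existent paths.
        [ -e "$f" ] || continue
        echo "loading $f"
        # docker load accepts gzip-compressed archives directly.
        docker load -i "$f" || return 1
        count=$((count + 1))
    done
    echo "loaded $count image archive(s)"
}
```

For example, run `load_images /root/images` (a hypothetical path) on each node. If the nodes run containerd instead of Docker, replace the `docker load` line with that runtime's import command.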

Install Prometheus-Operator

```shell
[root@master231 kube-prometheus-0.11.0]# kubectl apply --server-side -f manifests/setup
[root@master231 kube-prometheus-0.11.0]# kubectl wait \
    --for condition=Established \
    --all CustomResourceDefinition \
    --namespace=monitoring
[root@master231 kube-prometheus-0.11.0]# kubectl apply -f manifests/
```

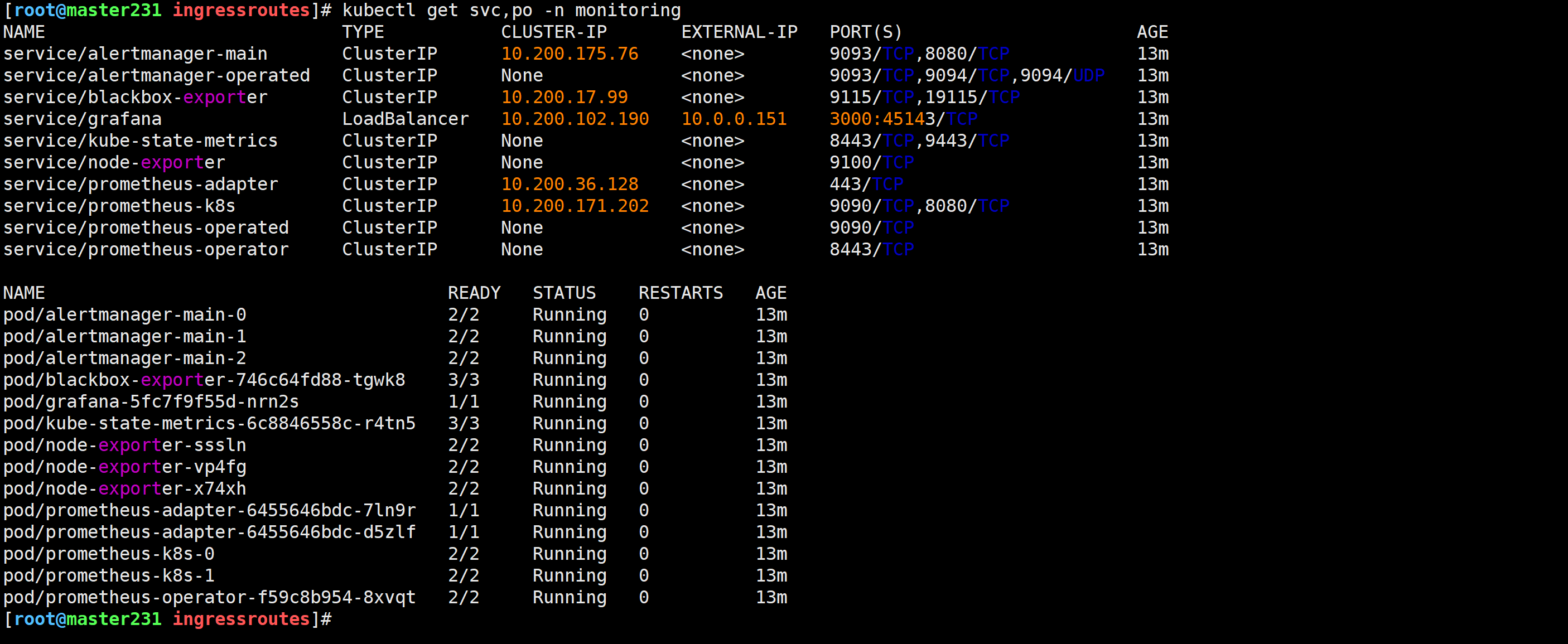

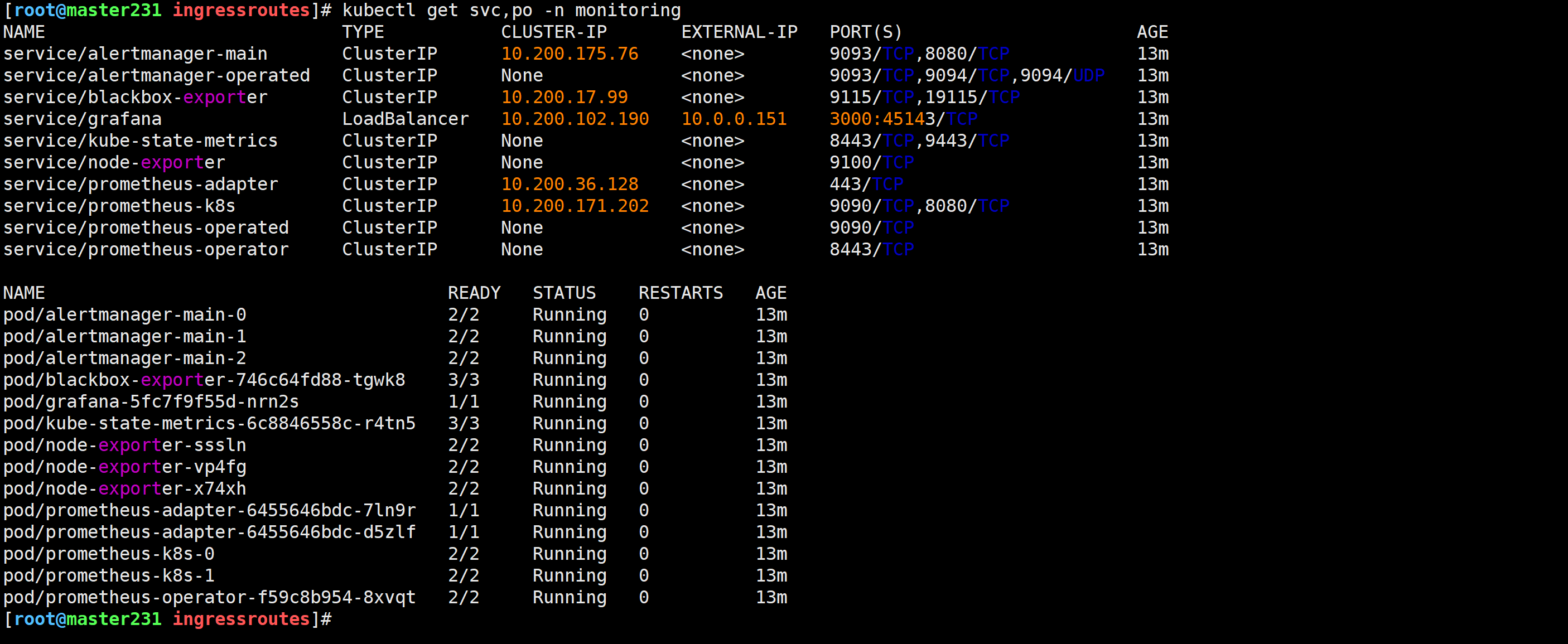

Check whether Prometheus was deployed successfully

```shell
[root@master231 kube-prometheus-0.11.0]# kubectl get pods -n monitoring -o wide
```

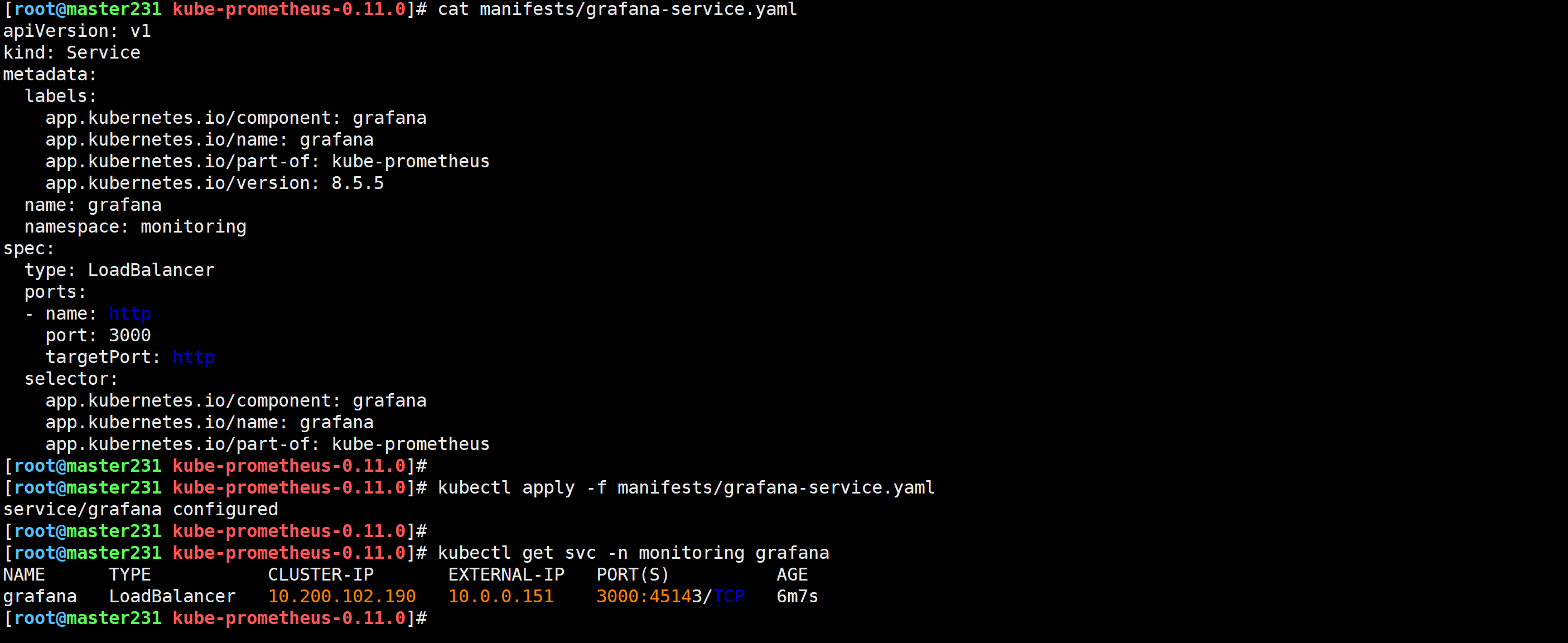

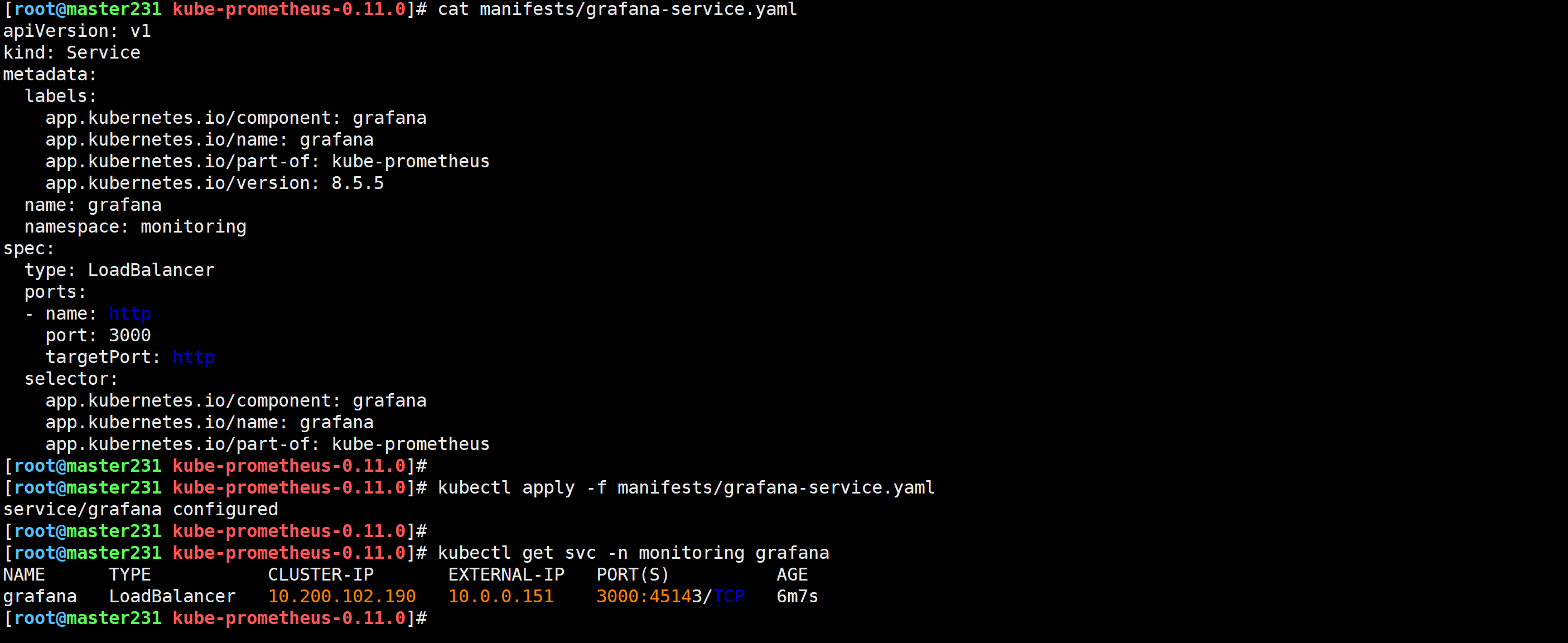
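`kubectl get pods` shows a single snapshot. Right after `kubectl apply`, some Pods are usually still pulling images or starting. The sketch below blocks until all of them are Ready. `wait_monitoring_ready` is a hypothetical helper, and its 300s default timeout is an arbitrary choice.

```shell
# Hypothetical helper: wait until every Pod in the namespace reports Ready,
# then print the same wide listing as above.
# Assumption: 300s is enough; slow image pulls may need a longer timeout.
wait_monitoring_ready() {
    ns=${1:-monitoring}
    wait_for=${2:-300s}
    kubectl -n "$ns" wait --for=condition=Ready pods --all --timeout="$wait_for" &&
        kubectl -n "$ns" get pods -o wide
}
```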

Modify the Grafana svc (change its type to LoadBalancer)

```shell
[root@master231 kube-prometheus-0.11.0]# cat manifests/grafana-service.yaml
apiVersion: v1
kind: Service
metadata:
  ...
  name: grafana
  namespace: monitoring
spec:
  type: LoadBalancer
  ...
[root@master231 kube-prometheus-0.11.0]#
[root@master231 kube-prometheus-0.11.0]# kubectl apply -f manifests/grafana-service.yaml
service/grafana configured
[root@master231 kube-prometheus-0.11.0]#
```

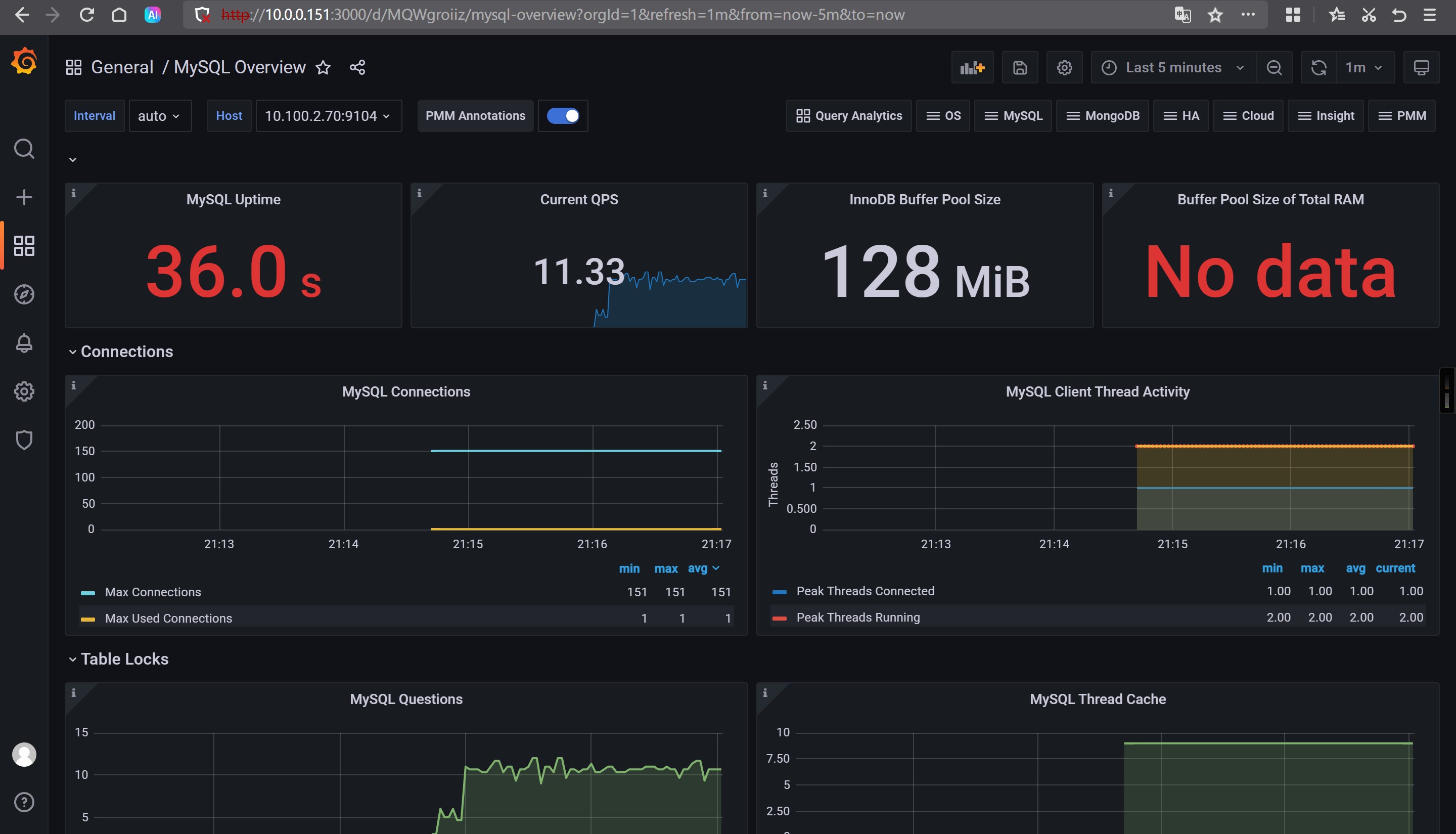

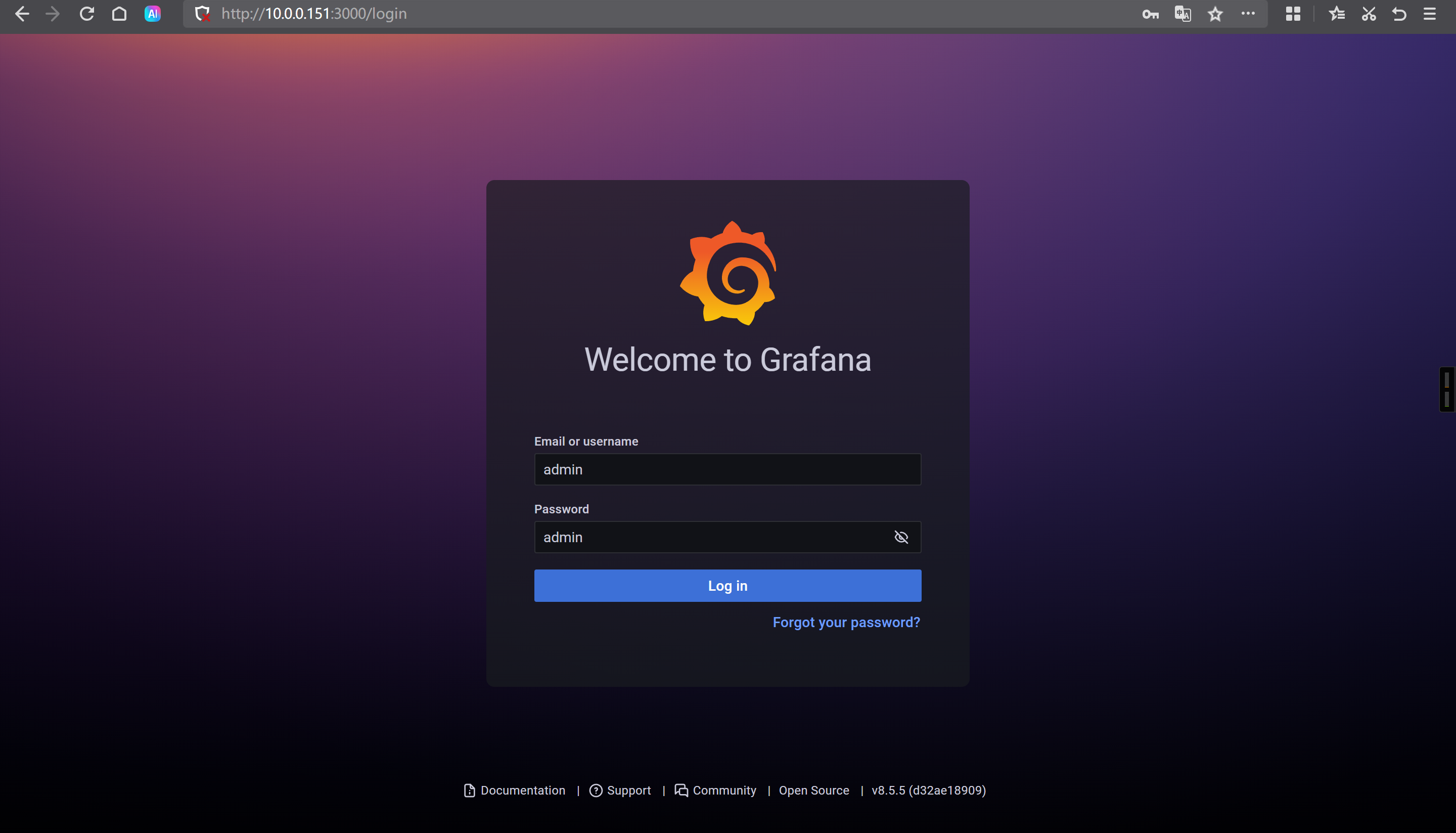
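Editing manifests/grafana-service.yaml keeps the change alongside the other manifests. For a quick one-off, you can patch the live Service instead. `expose_grafana` below is a hypothetical helper.

A LoadBalancer Service only gets an external address if the cluster runs a load-balancer implementation such as MetalLB. The 10.0.0.151 address used for the WebUI suggests one is present, but that is an assumption.

```shell
# Hypothetical helper: patch the live grafana Service to type LoadBalancer,
# then print its external IP. Empty output means the address is still pending.
# Assumption: a load-balancer implementation exists in the cluster.
expose_grafana() {
    kubectl -n monitoring patch svc grafana -p '{"spec":{"type":"LoadBalancer"}}' &&
        kubectl -n monitoring get svc grafana \
            -o jsonpath='{.status.loadBalancer.ingress[0].ip}'
}
```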

Access the Grafana WebUI

http://10.0.0.151:3000/

Default username and password: admin/admin

🌟Exposing the Prometheus WebUI outside the K8S cluster with traefik

Check that the traefik component is deployed

```shell
[root@master231 ingressroutes]# helm list -n traefik
[root@master231 ingressroutes]# kubectl get svc,pods -n traefik
```

Write the resource manifest

```shell
[root@master231 ingressroutes]# cat 19-ingressRoute-prometheus.yaml
apiVersion: traefik.io/v1alpha1
kind: IngressRoute
metadata:
  name: ingressroute-prometheus
  namespace: monitoring
spec:
  entryPoints:
  - web
  routes:
  - match: Host(`prom.zhubaolin.com`) && PathPrefix(`/`)
    kind: Rule
    services:
    - name: prometheus-k8s
      port: 9090
[root@master231 ingressroutes]# kubectl apply -f 19-ingressRoute-prometheus.yaml
ingressroute.traefik.io/ingressroute-prometheus created
[root@master231 ingressroutes]#
```

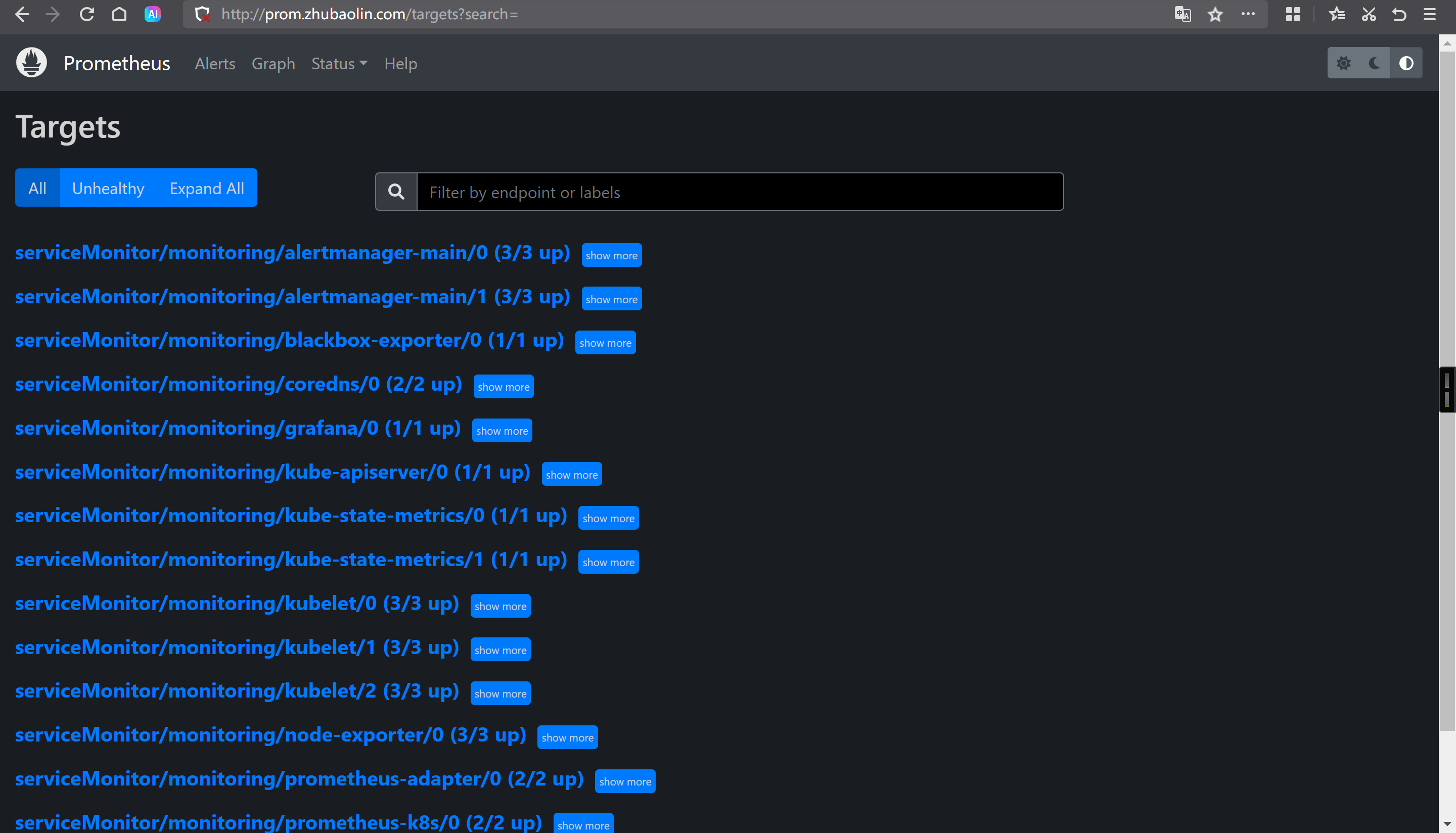

Test access from a browser

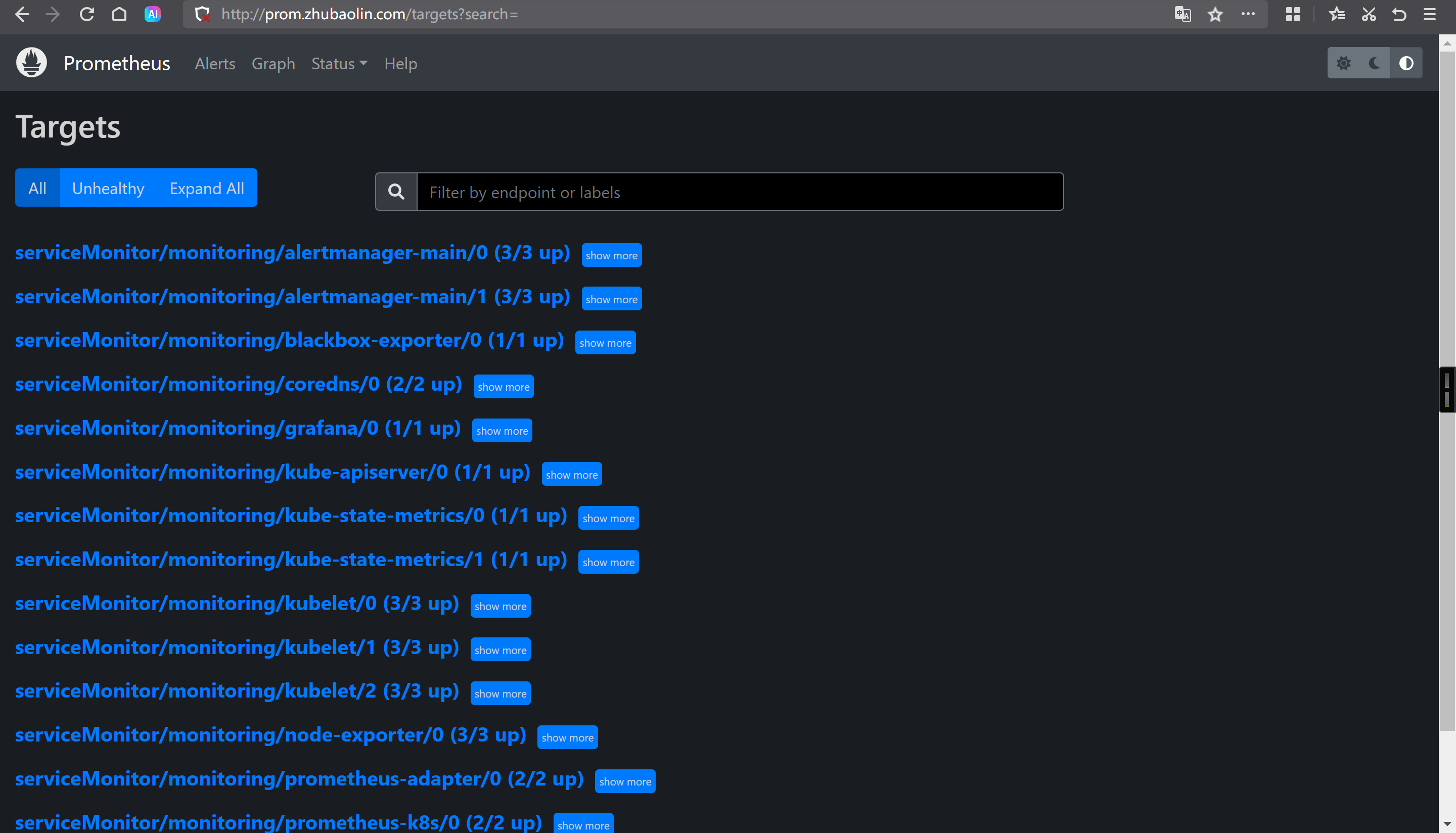

http://prom.zhubaolin.com/targets?search=
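The browser test assumes prom.zhubaolin.com resolves to the traefik entry point on the client machine, for example through a hosts-file entry. You can also check the route from the shell by setting the Host header by hand. In this sketch:

- `check_prom_route` is a hypothetical helper.
- The traefik address is a placeholder; take it from `kubectl get svc -n traefik`.
- The `web` entry point is assumed to be reachable on port 80.

```shell
# Hypothetical helper: request Prometheus's /-/healthy endpoint through traefik,
# setting the Host header by hand so no DNS or hosts-file entry is needed.
# $1 = traefik address (placeholder), $2 = host name matched by the IngressRoute.
check_prom_route() {
    traefik_ip=$1
    host=${2:-prom.zhubaolin.com}
    # Print only the HTTP status code; 200 means the route reaches Prometheus.
    curl -s -o /dev/null -w '%{http_code}\n' \
        -H "Host: $host" "http://$traefik_ip/-/healthy"
}
```

`check_prom_route <traefik-IP>` should print `200` once the IngressRoute is working.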

Other options

hostNetwork

hostPort

port-forward

NodePort

LoadBalancer

Ingress

IngressRoute
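Of these options, port-forward needs no change to any cluster resource, which makes it convenient for a quick look. Below is a sketch with a hypothetical `forward_prometheus` helper.

```shell
# Hypothetical helper for the port-forward option: forward a local port to the
# prometheus-k8s Service. --address 0.0.0.0 also accepts connections from other
# hosts (by default kubectl listens on localhost only).
# Runs in the foreground until interrupted with Ctrl+C.
forward_prometheus() {
    kubectl -n monitoring port-forward --address 0.0.0.0 svc/prometheus-k8s "${1:-9090}:9090"
}
```

Run `forward_prometheus` on master231, then browse to port 9090 on that host.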

🌟Case study: monitoring the cloud-native application etcd with Prometheus

Test the etcd metrics endpoint

Find where the etcd certificates are stored

```shell
[root@master231 ~]# egrep "\--key-file|--cert-file|--trusted-ca-file" /etc/kubernetes/manifests/etcd.yaml
    - --cert-file=/etc/kubernetes/pki/etcd/server.crt
    - --key-file=/etc/kubernetes/pki/etcd/server.key
    - --trusted-ca-file=/etc/kubernetes/pki/etcd/ca.crt
```

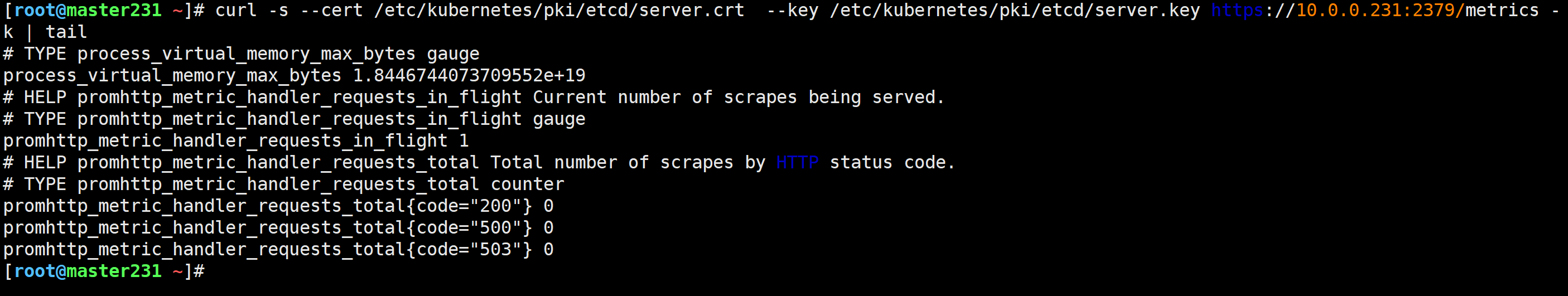

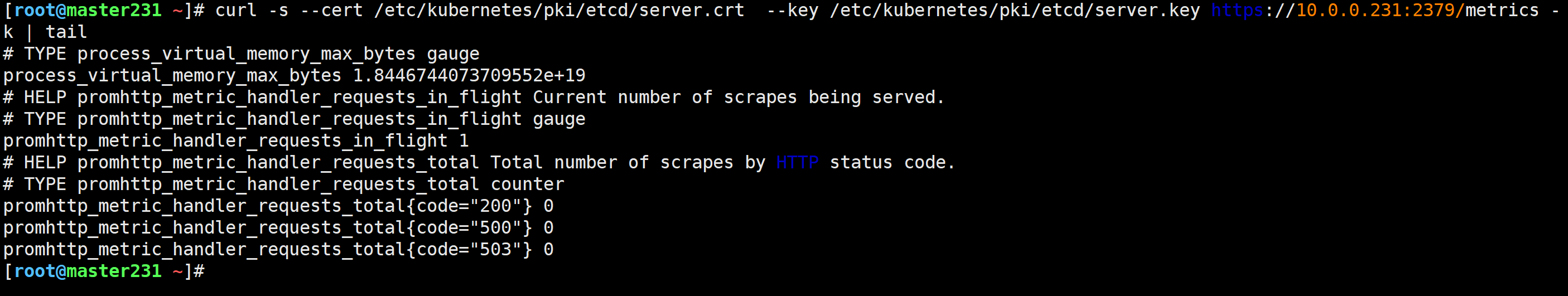

Access the metrics endpoint using the etcd certificates

```shell
[root@master231 ~]# curl -s --cert /etc/kubernetes/pki/etcd/server.crt --key /etc/kubernetes/pki/etcd/server.key https://10.0.0.231:2379/metrics -k | tail
```
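`tail` only shows whichever metrics happen to come last. To check specific values, you can filter the output by metric name. The sketch below uses a hypothetical `etcd_metric` helper with the same certificate, key, and endpoint as the command above. `etcd_server_has_leader` (1 when a leader exists) and `etcd_cluster_version` are standard etcd metrics.

```shell
# Hypothetical helper: fetch etcd's /metrics with the same client certificate
# and key as above, and print only samples whose metric name matches $1
# (an extended regex). ETCD_HOST defaults to the endpoint used above.
etcd_metric() {
    pki=/etc/kubernetes/pki/etcd
    curl -sk --cert "$pki/server.crt" --key "$pki/server.key" \
        "https://${ETCD_HOST:-10.0.0.231}:2379/metrics" |
        # Require a space or "{" after the name so prefixes do not match.
        grep -E "^($1)[ {]"
}
```

For example: `etcd_metric 'etcd_server_has_leader|etcd_cluster_version'`.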

Create a secret from the etcd certificates and mount it into the Prometheus server

Find the paths of the etcd certificate files to mount

```shell
[root@master231 ~]# egrep "\--key-file|--cert-file|--trusted-ca-file" /etc/kubernetes/manifests/etcd.yaml
    - --cert-file=/etc/kubernetes/pki/etcd/server.crt
    - --key-file=/etc/kubernetes/pki/etcd/server.key
    - --trusted-ca-file=/etc/kubernetes/pki/etcd/ca.crt
```
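Rather than copying the paths by hand, you can read them from the manifest itself. Below is a sketch with a hypothetical `etcd_flag` helper that prints the value of one flag from the kubeadm etcd static Pod manifest.

```shell
# Hypothetical helper: print the value of --$1 from the etcd static Pod
# manifest ($2 overrides the manifest path).
etcd_flag() {
    manifest=${2:-/etc/kubernetes/manifests/etcd.yaml}
    # Match lines like "    - --cert-file=/path" and print only the path;
    # "--peer-cert-file" does not match because "- --" must precede $1 directly.
    sed -n "s|^[[:space:]]*- --$1=||p" "$manifest"
}
```

The secret could then be created with this command:

`kubectl create secret generic etcd-tls -n monitoring --from-file="$(etcd_flag cert-file)" --from-file="$(etcd_flag key-file)" --from-file="$(etcd_flag trusted-ca-file)"`

`--from-file` names each key after the file's base name (server.crt, server.key, ca.crt), which is what the ServiceMonitor's tlsConfig paths rely on.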

Create the secret from the actual etcd certificate paths

```shell
[root@master231 ~]# kubectl create secret generic etcd-tls --from-file=/etc/kubernetes/pki/etcd/server.crt --from-file=/etc/kubernetes/pki/etcd/server.key --from-file=/etc/kubernetes/pki/etcd/ca.crt -n monitoring
secret/etcd-tls created
[root@master231 ~]#
[root@master231 ~]# kubectl -n monitoring get secrets etcd-tls
NAME       TYPE     DATA   AGE
etcd-tls   Opaque   3      12s
[root@master231 ~]#
```
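`DATA 3` confirms the number of keys but not their names. Those names become the file names under /etc/prometheus/secrets/etcd-tls/ once the secret is mounted. The sketch below lists them without printing the values. `secret_keys` is a hypothetical helper.

```shell
# Hypothetical helper: list the key names of a Secret without printing the
# base64-encoded values. $1 = secret name, $2 = namespace.
secret_keys() {
    kubectl -n "${2:-monitoring}" get secret "${1:-etcd-tls}" \
        -o go-template='{{range $k, $v := .data}}{{$k}}{{"\n"}}{{end}}'
}
```

Expected keys: `ca.crt`, `server.crt`, and `server.key`, one per line.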

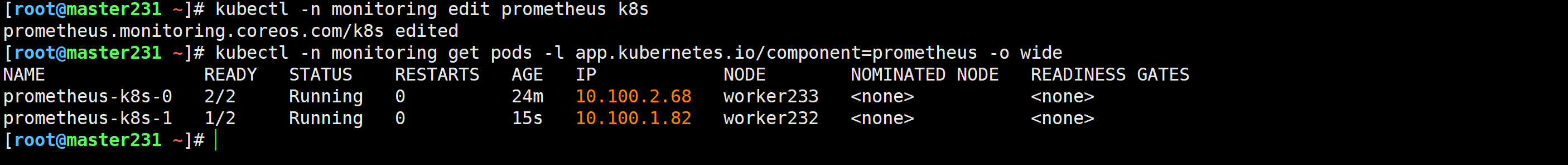

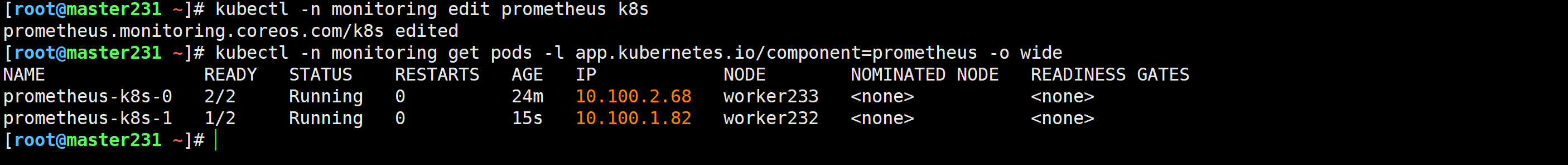

Edit the Prometheus resource; the Prometheus Pods restart automatically after the change is saved

```shell
[root@master231 ~]# kubectl -n monitoring edit prometheus k8s
...
spec:
  secrets:
  - etcd-tls
...
[root@master231 ~]# kubectl -n monitoring get pods -l app.kubernetes.io/component=prometheus -o wide
```
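`kubectl edit` is interactive. A scriptable alternative uses a JSON merge patch. Note that a merge patch replaces the whole `spec.secrets` list. The sketch therefore assumes the list was empty beforehand, which should be the case for a fresh kube-prometheus install; include any existing entries otherwise. `mount_secret_in_prometheus` is a hypothetical helper.

```shell
# Hypothetical helper: non-interactive alternative to `kubectl edit prometheus k8s`.
# Assumption: spec.secrets was empty, because a merge patch replaces the list.
mount_secret_in_prometheus() {
    kubectl -n monitoring patch prometheus k8s --type merge \
        -p "{\"spec\":{\"secrets\":[\"${1:-etcd-tls}\"]}}"
}
```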

Check that the certificates were mounted

```shell
[root@master231 ~]# kubectl -n monitoring exec prometheus-k8s-0 -c prometheus -- ls -l /etc/prometheus/secrets/etcd-tls
[root@master231 ~]# kubectl -n monitoring exec prometheus-k8s-1 -c prometheus -- ls -l /etc/prometheus/secrets/etcd-tls
```

Write the resource manifest

```shell
[root@master231 servicemonitors]# cat 01-smon-svc-etcd.yaml
apiVersion: v1
kind: Service
metadata:
  name: etcd-k8s
  namespace: kube-system
  labels:
    apps: etcd
spec:
  selector:
    component: etcd
  ports:
  - name: https-metrics
    port: 2379
    targetPort: 2379
  type: ClusterIP
---
apiVersion: monitoring.coreos.com/v1
kind: ServiceMonitor
metadata:
  name: smon-etcd
  namespace: monitoring
spec:
  # Name of a label on the target Service whose value becomes the job label.
  # Optional: if the Service has no such label, the job name defaults to the
  # Service name.
  jobLabel: kubeadm-etcd-k8s
  # How to scrape the backend targets
  endpoints:
  # Scrape interval
  - interval: 3s
    # Metrics port; this refers to the Service's spec.ports[].name
    port: https-metrics
    # Path of the metrics endpoint
    path: /metrics
    # Protocol of the metrics endpoint
    scheme: https
    # Certificate files used to connect to etcd
    tlsConfig:
      # etcd CA certificate
      caFile: /etc/prometheus/secrets/etcd-tls/ca.crt
      # etcd certificate
      certFile: /etc/prometheus/secrets/etcd-tls/server.crt
      # etcd private key
      keyFile: /etc/prometheus/secrets/etcd-tls/server.key
      # Skip certificate verification, since these certificates come from the
      # cluster's own CA rather than a publicly trusted one.
      insecureSkipVerify: true
  # Namespace of the target Service
  namespaceSelector:
    matchNames:
    - kube-system
  # Labels of the target Service
  selector:
    # Note: these must match the labels on the etcd Service
    matchLabels:
      apps: etcd
[root@master231 servicemonitors]#
[root@master231 servicemonitors]#
[root@master231 servicemonitors]# kubectl apply -f 01-smon-svc-etcd.yaml
service/etcd-k8s created
servicemonitor.monitoring.coreos.com/smon-etcd created
[root@master231 servicemonitors]#
```

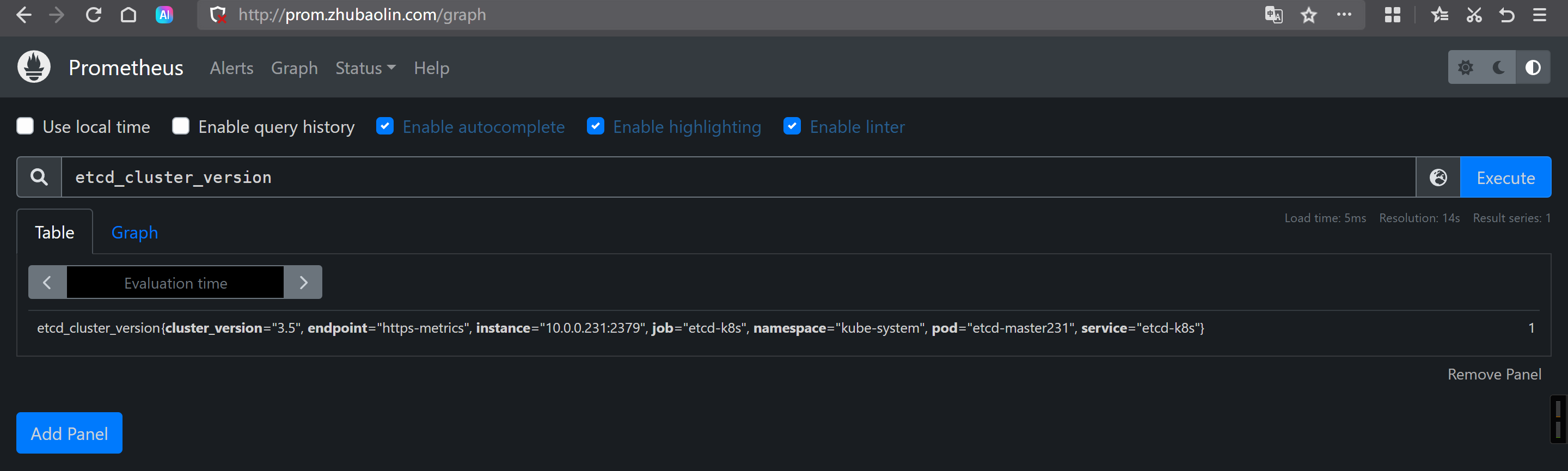

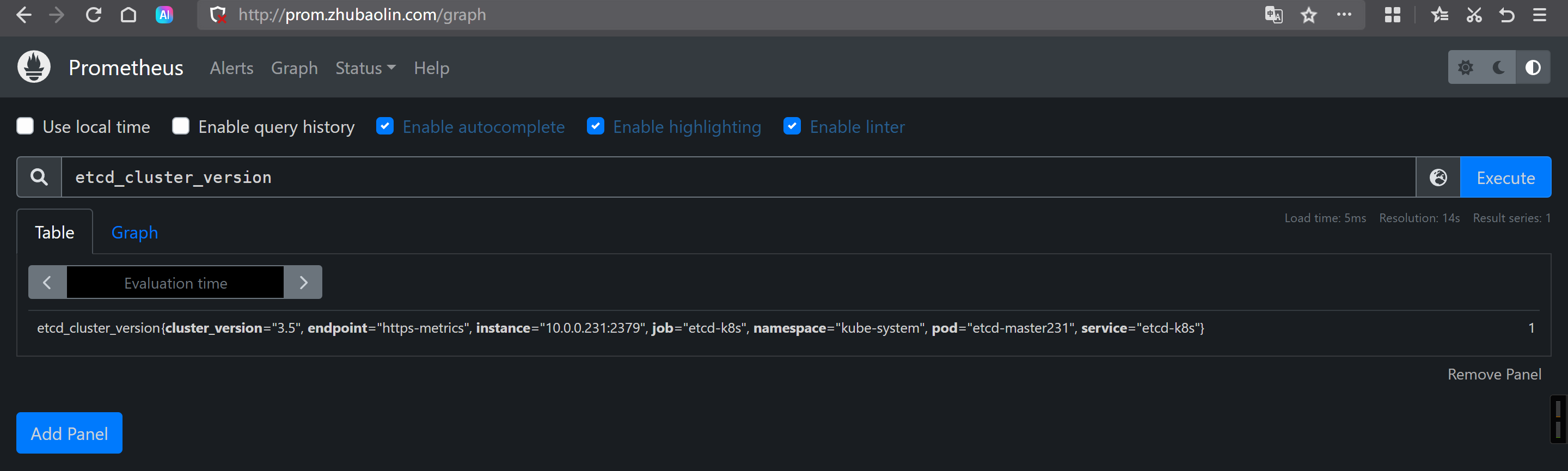

Query the data in Prometheus

etcd_cluster_version

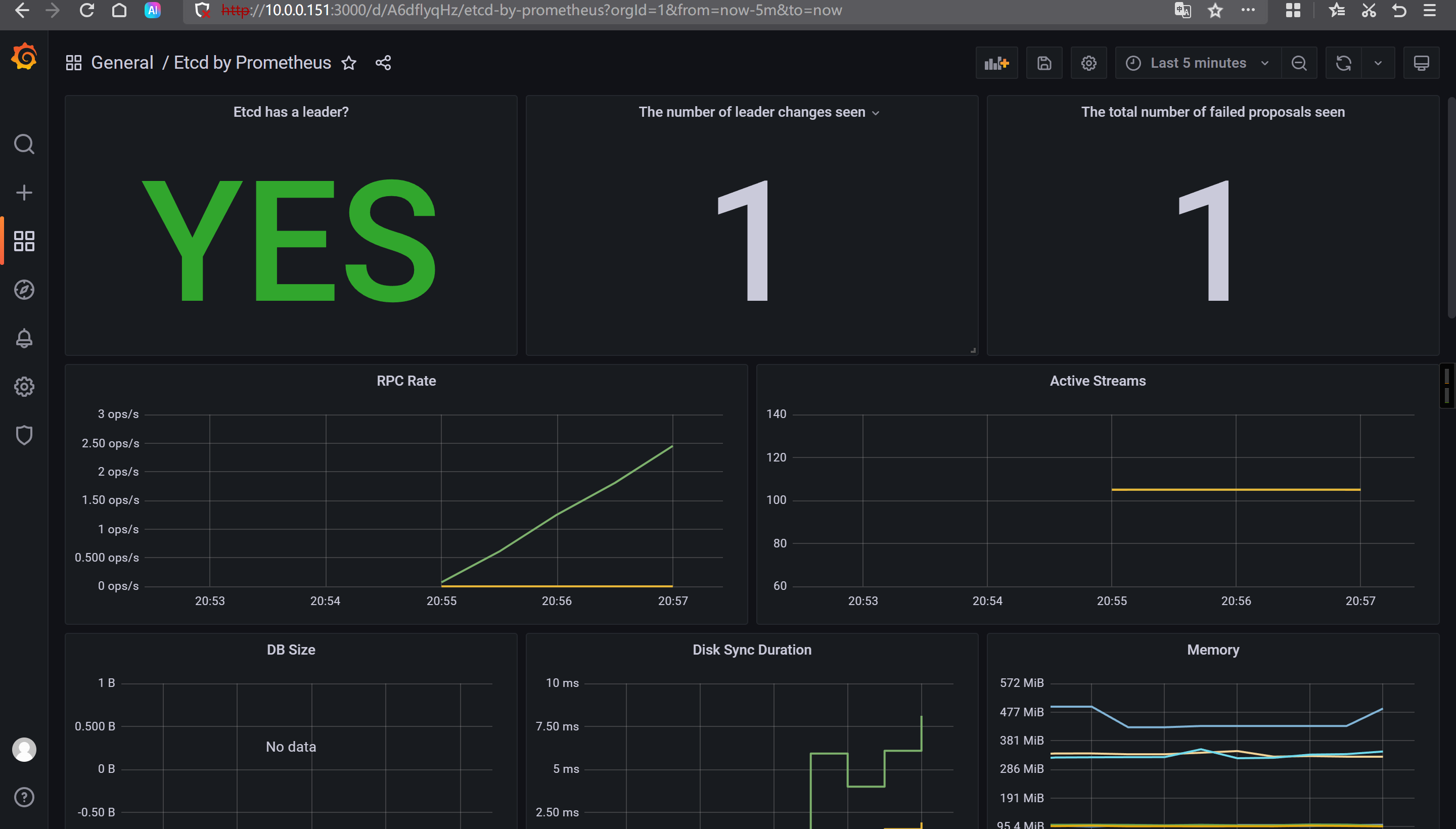

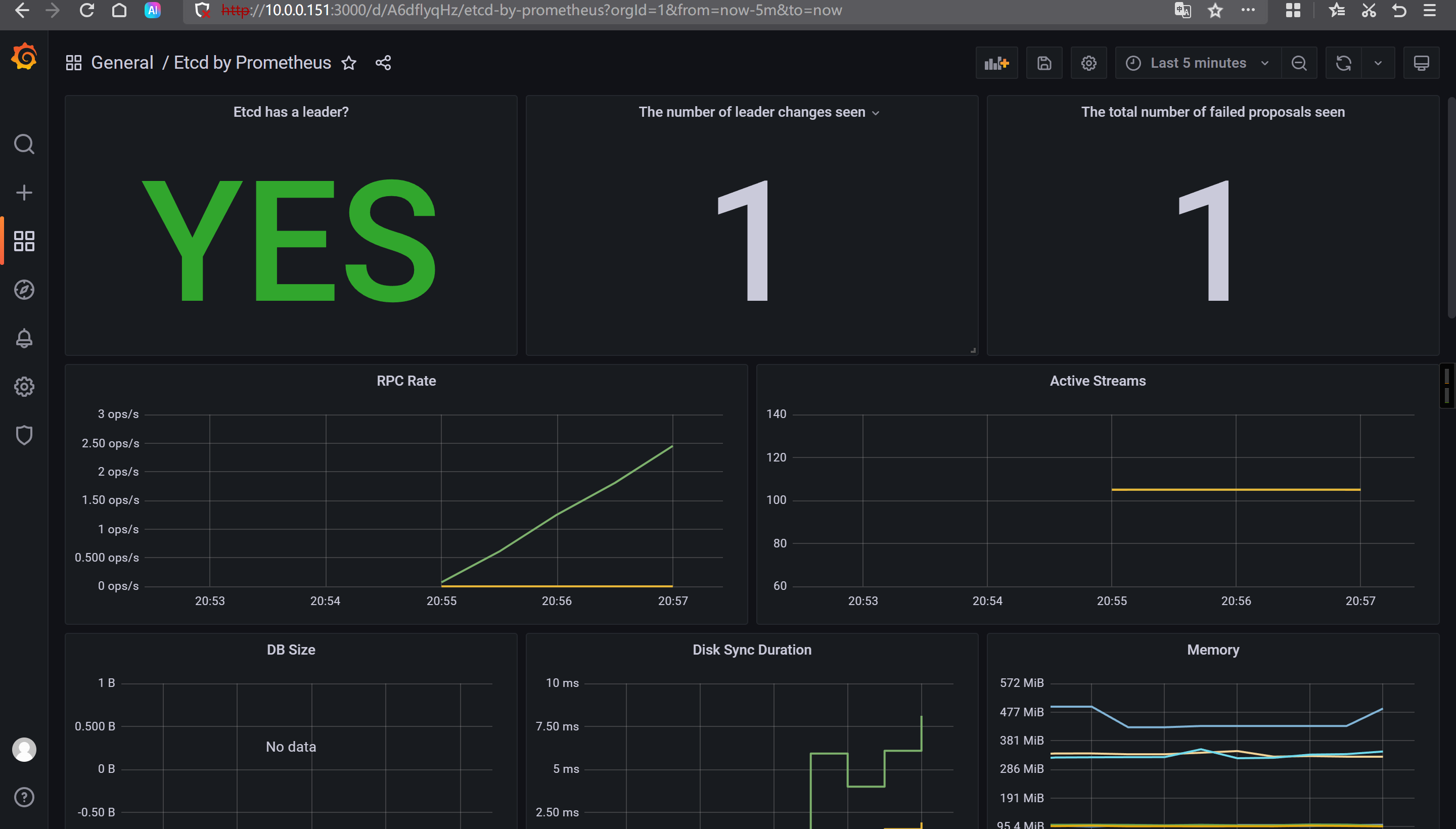
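You can run the same check from the shell through the Prometheus HTTP API (`/api/v1/query`). The sketch below uses a hypothetical `prom_query` helper; its default URL is the traefik host configured earlier.

The etcd Service carries no label named kubeadm-etcd-k8s, so the `job` label should fall back to the Service name etcd-k8s. `prom_query 'up{job="etcd-k8s"}'` is therefore expected to return the value 1 for each etcd member. Confirm the job name on the Targets page before relying on it.

```shell
# Hypothetical helper: run an instant PromQL query via the Prometheus HTTP API
# and print the raw JSON response.
# Assumption: PROM_URL defaults to the IngressRoute host set up earlier.
prom_query() {
    # -G turns --data-urlencode into a URL-encoded query string on a GET request.
    curl -s -G "${PROM_URL:-http://prom.zhubaolin.com}/api/v1/query" \
        --data-urlencode "query=$1"
}
```

The same helper works for other metrics, for example `prom_query 'etcd_cluster_version'`.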

Import a Grafana dashboard template by ID

3070

🌟Monitoring the non-cloud-native application MySQL with Prometheus

Write the resource manifest

```shell
[root@master231 servicemonitors]# cat > 02-smon-mysqld.yaml <<EOF
apiVersion: apps/v1
kind: Deployment
metadata:
  name: mysql80-deployment
spec:
  replicas: 1
  selector:
    matchLabels:
      apps: mysql80
  template:
    metadata:
      labels:
        apps: mysql80
    spec:
      containers:
      - name: mysql
        image: harbor250.zhubl.xyz/zhubl-db/mysql:8.0.36-oracle
        ports:
        - containerPort: 3306
        env:
        - name: MYSQL_ROOT_PASSWORD
          value: zhubaolin
        - name: MYSQL_USER
          value: zhu
        - name: MYSQL_PASSWORD
          value: "zhu"
---
apiVersion: v1
kind: Service
metadata:
  name: mysql80-service
spec:
  selector:
    apps: mysql80
  ports:
  - protocol: TCP
    port: 3306
    targetPort: 3306
---
apiVersion: v1
kind: ConfigMap
metadata:
  name: my.cnf
data:
  .my.cnf: |-
    [client]
    user = zhu
    password = zhu
    [client.servers]
    user = zhu
    password = zhu
---
apiVersion: apps/v1
kind: Deployment
metadata:
  name: mysql-exporter-deployment
spec:
  replicas: 1
  selector:
    matchLabels:
      apps: mysql-exporter
  template:
    metadata:
      labels:
        apps: mysql-exporter
    spec:
      volumes:
      - name: data
        configMap:
          name: my.cnf
          items:
          - key: .my.cnf
            path: .my.cnf
      containers:
      - name: mysql-exporter
        image: registry.cn-hangzhou.aliyuncs.com/yinzhengjie-k8s/mysqld-exporter:v0.15.1
        command:
        - mysqld_exporter
        - --config.my-cnf=/root/my.cnf
        - --mysqld.address=mysql80-service.default.svc.zhubl.xyz:3306
        securityContext:
          runAsUser: 0
        ports:
        - containerPort: 9104
        # DATA_SOURCE_NAME is no longer read by mysqld_exporter v0.15+,
        # which is why the --config.my-cnf file is used instead.
        #env:
        #- name: DATA_SOURCE_NAME
        #  value: mysql_exporter:zhubaolin@(mysql80-service.default.svc.zhubl.xyz:3306)
        volumeMounts:
        - name: data
          mountPath: /root/my.cnf
          subPath: .my.cnf
---
apiVersion: v1
kind: Service
metadata:
  name: mysql-exporter-service
  labels:
    apps: mysqld
spec:
  selector:
    apps: mysql-exporter
  ports:
  - protocol: TCP
    port: 9104
    targetPort: 9104
    name: mysql80
---
apiVersion: monitoring.coreos.com/v1
kind: ServiceMonitor
metadata:
  name: mysql-smon
spec:
  jobLabel: kubeadm-mysql-k8s
  endpoints:
  - interval: 3s
    # "port" must be the *name* of a svc port. To select a port by number, use
    # "targetPort" (the container port of the Pods behind the svc) instead, e.g.:
    # targetPort: 9104
    # Referring to the port by name is preferable anyway: if the svc port number
    # changes but its name does not, nothing here needs to be updated.
    port: mysql80
    path: /metrics
    scheme: http
  namespaceSelector:
    matchNames:
    - default
  selector:
    matchLabels:
      apps: mysqld
EOF
[root@master231 servicemonitors]# kubectl apply -f 02-smon-mysqld.yaml
[root@master231 servicemonitors]#
[root@master231 servicemonitors]# kubectl get pods -o wide -l "apps in (mysql80,mysql-exporter)"
```

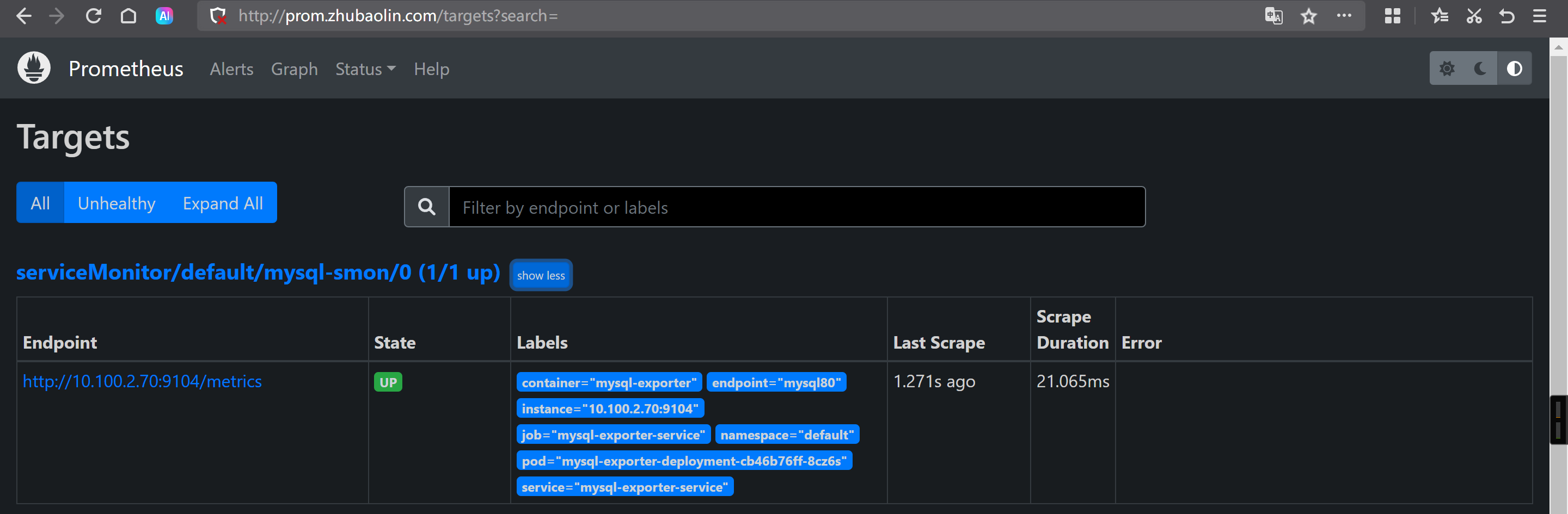

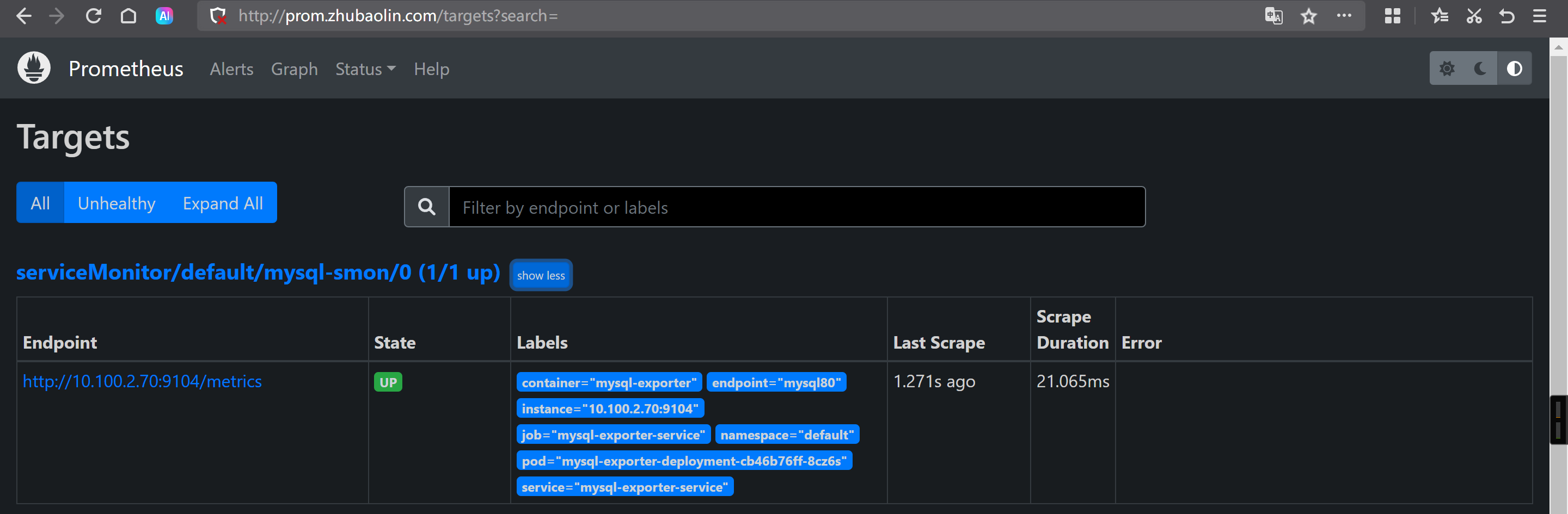
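MYSQL_USER only creates the account. When MYSQL_DATABASE is not set, the official mysql image grants that account nothing beyond the right to connect. The mysqld_exporter README recommends PROCESS, REPLICATION CLIENT, and SELECT for the exporter's user. Without them, `mysql_up` can report 1 while many other collectors return no data.

The sketch below assumes the private image behaves like the official one. `grant_exporter_privileges` is a hypothetical helper.

```shell
# Hypothetical helper: grant the exporter account the privileges recommended in
# the mysqld_exporter README. $1 = MySQL user (default zhu, from MYSQL_USER),
# $2 = root password (default zhubaolin, from MYSQL_ROOT_PASSWORD).
# Assumption: the image creates MYSQL_USER as '<user>'@'%', like the official image.
grant_exporter_privileges() {
    kubectl exec deploy/mysql80-deployment -c mysql -- \
        mysql -uroot -p"${2:-zhubaolin}" \
        -e "GRANT PROCESS, REPLICATION CLIENT, SELECT ON *.* TO '${1:-zhu}'@'%';"
}
```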

Test the query in Prometheus

mysql_up[30s]

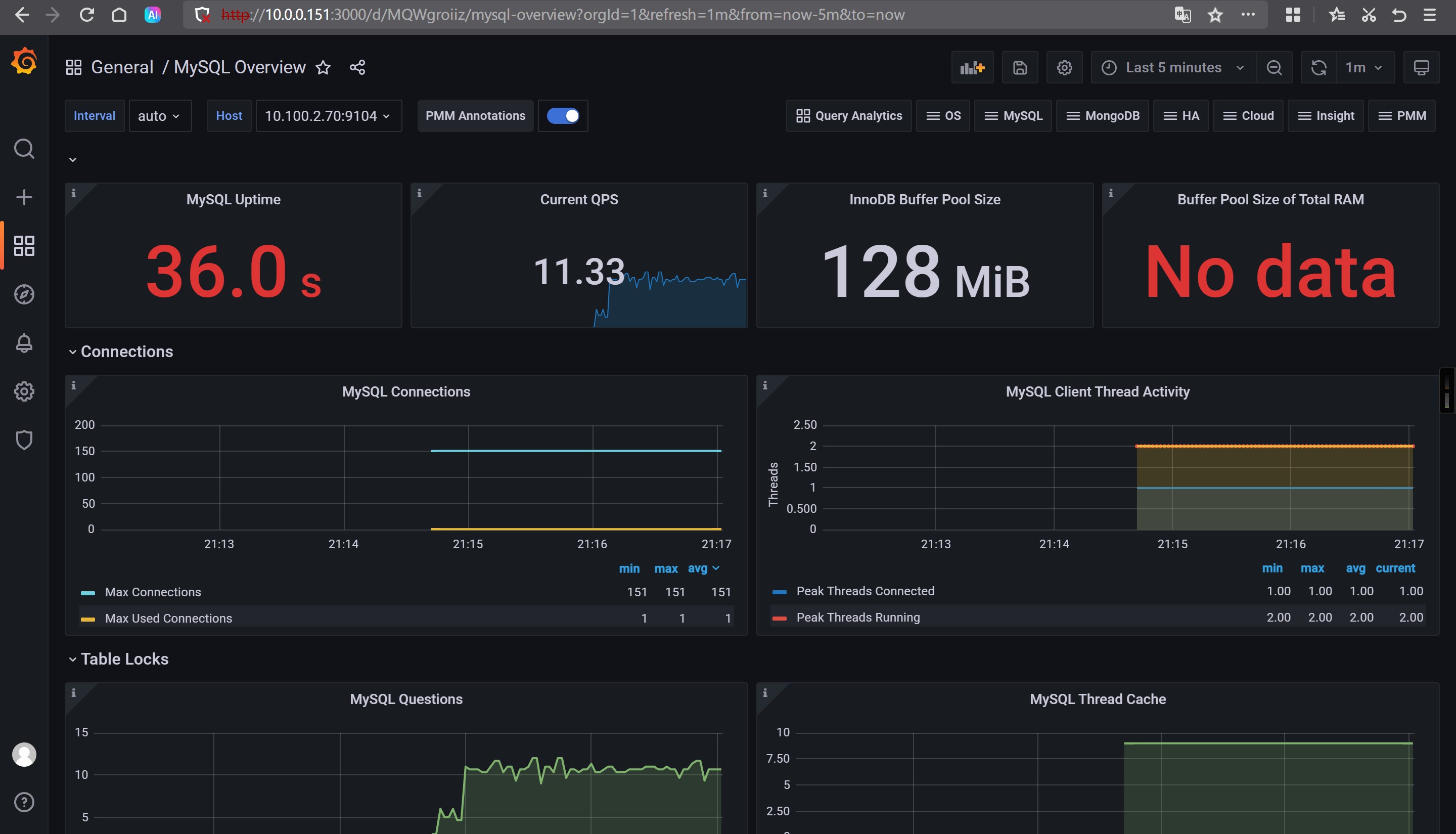

Import Grafana dashboard templates by ID

7362

14057

17320